Which of the following risks is MOST likely to be accepted as a result of transferring business to a single CSP?

Vendor lock-in

The inability to scale

Data breach due to a break-in

Loss of equipment due to a natural disaster

Vendor lock-in is a situation where a customer becomes dependent on a single cloud service provider (CSP) and cannot easily switch to another vendor without substantial cost, technical incompatibility, or legal constraints [1]. It is the risk most likely to be accepted when transferring business to a single CSP, because concentrating on one provider may offer benefits such as lower prices, higher performance, or better integration. However, vendor lock-in also has drawbacks, such as reduced flexibility, increased dependency, and limited innovation [2]. Customers should therefore weigh the pros and cons before choosing a CSP and try to avoid or mitigate lock-in by using open standards, multi-cloud strategies, or contractual agreements [3]. References: [1] What is vendor lock-in? | Vendor lock-in and cloud computing; [2] What Is Cloud Vendor Lock-In (And How To Break Free)? - CAST AI; [3] CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 3: Cloud Computing Concepts, page 97.

Which of the following cloud migration methods would be BEST suited for disaster recovery scenarios?

Replatforming

Phased

Rip and replace

Lift and shift

Lift and shift is a cloud migration method that moves an application or workload from one environment to another without significant changes to its architecture, configuration, or code. It is also known as rehosting or forklifting. Lift and shift is best suited for disaster recovery scenarios because it allows a fast and simple migration of applications or workloads to the cloud when the original environment suffers a disaster or disruption. Because the application or workload remains unchanged, lift and shift also reduces the risk of errors or compatibility issues during the migration, and the migrated workload can still use the cloud’s scalability, availability, and security features to improve its performance and resilience. However, lift and shift may not take full advantage of the cloud’s native capabilities and services, and it may incur higher operational costs due to the maintenance of the legacy infrastructure and software. It is therefore better suited to short-term or tactical migrations, such as disaster recovery, than to long-term or strategic ones.

Replatforming, phased, and rip and replace are less suitable for disaster recovery scenarios because they involve more changes and complexity, which may increase the migration time and risk.

Replatforming is a cloud migration method that makes some modifications to the application or workload to optimize it for the cloud environment, such as changing the operating system, database, or middleware. It is sometimes described as “lift, tinker, and shift”. Replatforming can improve the performance and efficiency of the application or workload in the cloud, but it may also introduce challenges and costs, such as testing, debugging, and licensing.

Phased is a cloud migration method that moves an application or workload to the cloud in stages or increments, rather than all at once. It is also known as iterative or hybrid. A phased migration can reduce the impact and risk of the migration process, as it allows for testing, feedback, and adjustment along the way. However, it can also prolong the migration time and effort, as it requires more coordination and integration between the source and target environments.

Rip and replace is a cloud migration method that discards the existing application or workload and builds a new one from scratch in the cloud, using cloud-native technologies and services. It is also known as rebuild or cloud-native. Rip and replace can maximize the benefits and potential of the cloud, but it may also entail the highest cost and complexity, as it requires a complete redesign and redevelopment of the application or workload.

References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 7: Cloud Migration, Section 7.2: Cloud Migration Methods, Page 211; Cloud Migration Strategies: A Guide to Moving Your Infrastructure | Rackspace Technology

A Chief Information Officer is starting a cloud migration plan for the next three years of growth and requires an understanding of IT initiatives. Which of the following will assist in the assessment?

Technical gap analysis

Cloud architecture diagram review

Current and future business requirements

Feasibility study

A Chief Information Officer (CIO) who is starting a cloud migration plan for the next three years of growth and requires an understanding of IT initiatives should consider the current and future business requirements as a key factor in the assessment. Current and future business requirements are the needs and expectations of the organization and its stakeholders regarding the IT systems and services that support the business goals and processes. These requirements may include functional, non-functional, technical, operational, financial, regulatory, and strategic aspects of the IT systems and services. Understanding the current and future business requirements can help the CIO to:

A technical gap analysis, a cloud architecture diagram review, and a feasibility study are also important steps in the cloud migration assessment, but they are not as comprehensive as the current and future business requirements. A technical gap analysis is a process of comparing the current state of the IT systems and services with the desired state in the cloud, and identifying the gaps or differences between them. A technical gap analysis can help the CIO to understand the compatibility, performance, and integration issues that may arise during the cloud migration, and to plan the necessary changes or improvements to address them. A cloud architecture diagram review is a process of examining the design and structure of the cloud environment, and how the IT systems and services will be deployed, configured, and managed in the cloud. A cloud architecture diagram review can help the CIO to ensure that the cloud environment meets the technical, functional, and non-functional requirements of the IT systems and services, and that it follows the best practices and standards of the cloud provider. A feasibility study is a process of evaluating the technical, financial, operational, and organizational aspects of moving from on-premises IT systems and services to cloud-based alternatives. A feasibility study can help the CIO to determine the viability and desirability of the cloud migration, and to weigh the pros and cons of different cloud migration approaches.

References: Cloud Migration Checklist: 17 Steps to Future-Proof Your Business; What business needs to know before a cloud migration - PwC; Planning for a successful cloud migration | Google Cloud Blog; Assess workloads and validate assumptions before migration; Migration environment planning checklist - Cloud Adoption Framework …; Navigating Success: The Crucial Role of Feasibility Studies in SAP …

Due to local natural disaster concerns, a cloud customer is transferring all of its cold storage data to servers in a safer geographic region. Which of the following risk response techniques is the cloud customer employing?

Avoidance

Transference

Mitigation

Acceptance

Avoidance is a risk response technique that involves changing the project plan to eliminate the risk or protect the project objectives from its impact. Avoidance can be done by modifying the scope, schedule, cost, or quality of the project. Avoidance is usually the most effective way to deal with a risk, but it may not always be possible or desirable. In this case, the cloud customer is transferring all of its cold storage data to servers in a safer geographic region, which means it is changing the location of its data storage to avoid the risk of a natural disaster affecting its data. This eliminates the possibility of losing the data to a natural disaster in the original region, which makes it an example of avoidance as a risk response technique. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 4: Cloud Security, Section 4.2: Cloud Security Concepts, Page 153; 5 Risk Response Strategies - ProjectEngineer

A document that outlines the scope of a project, specific deliverables, scheduling, and additional specific details from the client/buyer is called a:

statement of work.

standard operating procedure.

master service document.

service level agreement.

A statement of work (SOW) is a document that outlines the scope of a project, specific deliverables, scheduling, and additional specific details from the client/buyer. A SOW defines what the service provider will do for the client and how they will do it, as well as the expected outcomes and quality standards. A SOW is typically used as a supplement to a master service agreement (MSA) or a contract that establishes the general terms and conditions of the business relationship.


A business analyst asked for an RFI from a public CSP. The analyst wants to assess the financial aspects of a potential contract. Which of the following should the analyst expect to see in the RFI?

Time to market

Fixed costs

Training

Capital expenditures

A request for information (RFI) is a tool used by procurement teams to understand the options available for solving a problem or completing a task. Suppliers respond to RFIs with information about their products and services [1]. An RFI can inform buyers about the size, operation, experience, and products of potential suppliers [2]. A business analyst who asked for an RFI from a public cloud service provider (CSP) would want to assess the financial aspects of a potential contract. One of the financial aspects that the analyst should expect to see in the RFI is the fixed costs of the cloud service. Fixed costs are the costs that do not vary with the amount of resources or services consumed by the buyer. Fixed costs can include setup fees, subscription fees, maintenance fees, or support fees. Fixed costs can help the analyst to compare the prices of different cloud service providers and to plan the budget for the cloud project. Fixed costs can also affect the return on investment (ROI) and the total cost of ownership (TCO) of the cloud service [3].

The other options are not financial aspects that the analyst should expect to see in the RFI. Time to market, training, and capital expenditures are not relevant to the cloud service provider, but to the buyer. Time to market is the time it takes for the buyer to launch a product or service using the cloud. Training is the cost of educating the buyer’s staff on how to use the cloud service. Capital expenditures are the costs of acquiring or upgrading physical assets, such as servers or hardware, for the cloud project. The cloud service provider would not supply this information in the RFI; it is information that the buyer would need to consider in the procurement process. References: [1] RFIs: The Simple Guide to Writing a Request for Information - HubSpot Blog; [2] What is the difference between RFI, RFQ, RFT and RFP? | LawBite; [3] CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 1: Cloud Principles and Design, Section 1.3: Cloud Business Principles, Page 29.
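To illustrate why fixed costs matter when comparing RFI responses, the sketch below models a monthly bill as a fixed fee plus a usage-based charge. The provider names, fees, and rates are hypothetical values chosen for illustration; they do not come from any real price list.

```python
from dataclasses import dataclass


@dataclass
class Quote:
    provider: str          # hypothetical provider name
    fixed_monthly: float   # fees that do not vary with usage (subscription, support)
    rate_per_unit: float   # hypothetical usage-based rate per unit consumed


def monthly_cost(quote: Quote, units_used: float) -> float:
    """Total monthly cost = fixed costs + variable (usage-based) costs."""
    return quote.fixed_monthly + quote.rate_per_unit * units_used


# Illustrative quotes only.
quotes = [
    Quote("ProviderA", fixed_monthly=500.0, rate_per_unit=0.10),
    Quote("ProviderB", fixed_monthly=200.0, rate_per_unit=0.15),
]

for units in (1_000, 10_000):
    cheapest = min(quotes, key=lambda q: monthly_cost(q, units))
    print(f"{units} units: cheapest is {cheapest.provider}")
```

With these assumed numbers, the provider with the lower fixed fee is cheaper at low usage and the one with the lower unit rate is cheaper at high usage, which is why the analyst needs the fixed costs stated explicitly.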

A manufacturing company is selecting applications for a cloud migration. The company’s main concern relates to the ERP system, which needs to receive data from multiple industrial systems to generate the executive reports. Which of the following will provide the details needed for the company’s decision regarding the cloud migration?

Standard operating procedures

Feasibility studies

Statement of work

Benchmarks

Feasibility studies are the best option to provide the details needed for the company’s decision regarding the cloud migration. Feasibility studies are comprehensive assessments that evaluate the technical, financial, operational, and organizational aspects of moving an application or workload from one environment to another. They can help determine the suitability, viability, and benefits of migrating an application or workload to the cloud, as well as the challenges, risks, and costs involved. They can also help identify the best cloud solution and migration method for the application or workload, based on its requirements, dependencies, and characteristics. In the context of the manufacturing company, a feasibility study can help analyze the ERP system and its data sources, and provide information on how to migrate it to the cloud without compromising its functionality, performance, security, or compliance. A feasibility study can also help compare the cloud migration options with the current on-premises solution, and estimate the return on investment and the total cost of ownership of the cloud migration.

Standard operating procedures, statement of work, and benchmarks are not the best options, as they have different purposes and scopes.

Standard operating procedures are documents that describe the steps and tasks involved in performing a specific process or activity, such as installing, configuring, or troubleshooting an application or workload. They can help ensure consistency, quality, and efficiency in the execution of a process or activity, but they do not provide information on the feasibility or suitability of migrating an application or workload to the cloud.

A statement of work is a document that defines the scope, objectives, deliverables, and expectations of a project or contract, such as a cloud migration project. It can help establish the roles, responsibilities, and expectations of the parties involved, but it does not provide information on the feasibility or viability of migrating an application or workload to the cloud.

Benchmarks are tests or measurements that evaluate the performance, quality, or reliability of an application or workload, such as its speed, throughput, or availability. They can help compare an application or workload across different environments, such as on-premises or cloud, but they do not provide information on the feasibility or benefits of migrating it to the cloud.

References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 7: Cloud Migration, Section 7.1: Cloud Migration Concepts, Page 203; Navigating Success: The Crucial Role of Feasibility Studies in SAP Cloud Migration | SAP Blogs

An on-premises, business-critical application is used for financial reporting and forecasting. The Chief Financial Officer requests options to move the application to cloud. Which of the following would be BEST to review the options?

Test the applications in a sandbox environment.

Perform a gap analysis.

Conduct a feasibility assessment.

Design a high-level architecture.

A feasibility assessment is a process of evaluating the viability and suitability of moving an on-premises application to the cloud. A feasibility assessment can help identify the benefits, risks, costs, and challenges of cloud migration, as well as the technical and business requirements, constraints, and dependencies of the application. A feasibility assessment can also help compare different cloud service models, deployment models, and providers, and recommend the best option for the application. A feasibility assessment would be the best way to review the options for moving a business-critical application to the cloud.

A gap analysis is a process of identifying the differences between the current and desired state of a system or process. A gap analysis can help determine the gaps in performance, functionality, security, or compliance of an on-premises application and a cloud-based application, and suggest the actions needed to close the gaps. A gap analysis is usually performed after a feasibility assessment, when the cloud migration option has been selected, and before the transition planning phase.

A test is a process of verifying the functionality, performance, security, or compatibility of an application or system. A test can help detect and resolve any errors, bugs, or issues in the application or system, and ensure that it meets the expected standards and specifications. A test can be performed in a sandbox environment, which is an isolated and controlled environment that mimics the real production environment. A test is usually performed during or after the cloud migration process, when the application has been deployed or migrated to the cloud, and before the final release or launch.

A high-level architecture is a conceptual or logical design of an application or system that shows the main components, functions, relationships, and interactions of the application or system. A high-level architecture can help visualize and communicate the structure, behavior, and goals of the application or system, and guide the development and implementation process. A high-level architecture is usually created during the design phase of the cloud migration process, after the feasibility assessment and the gap analysis, and before the development and testing phase. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, page 109-110, 113-114, 117-118, 121-122; CompTIA Cloud Essentials+ Certification Training, CertMaster Learn for Cloud Essentials+, Module 3: Cloud Solutions, Lesson 3.2: Cloud Migration, Topic 3.2.1: Cloud Migration Process

A redundancy option must be provided for an on-premises server cluster. The financial team is concerned about the cost of extending to the cloud. Which of the following resources about the on-premises infrastructure would BEST help to estimate cloud costs?

Server cluster architecture diagram

Compute and storage reporting

Industry benchmarks

Resource management policy

Compute and storage reporting is the best resource to help estimate cloud costs for a redundancy option for an on-premises server cluster. Compute and storage reporting provides information about the current usage and performance of the on-premises servers, such as CPU, memory, disk, network, and I/O metrics. This information can help to determine the appropriate cloud service level and configuration that can match or exceed the on-premises capabilities. Compute and storage reporting can also help to identify any underutilized or overprovisioned resources that can be optimized to reduce costs.
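As a rough illustration of how usage reports feed a cost estimate, the sketch below sizes cloud capacity from reported peak usage plus headroom and multiplies by unit prices. All prices, field names, and the headroom figure are assumptions made for this example, not values from any provider.

```python
import math

# Hypothetical unit prices, for illustration only.
PRICE_PER_VCPU_HOUR = 0.04
PRICE_PER_GB_RAM_HOUR = 0.005
PRICE_PER_GB_STORAGE_MONTH = 0.02
HOURS_PER_MONTH = 730


def estimate_monthly_cost(servers, headroom_pct=120):
    """Estimate a monthly bill from per-server usage reports.

    Each report is a dict with the (assumed) keys peak_cpu_cores_used,
    peak_ram_gb_used, and storage_gb_used. Compute is sized to peak usage
    plus headroom, rounded up to whole vCPUs and whole GB of RAM.
    """
    total = 0.0
    for report in servers:
        vcpus = math.ceil(report["peak_cpu_cores_used"] * headroom_pct / 100)
        ram_gb = math.ceil(report["peak_ram_gb_used"] * headroom_pct / 100)
        compute = (vcpus * PRICE_PER_VCPU_HOUR
                   + ram_gb * PRICE_PER_GB_RAM_HOUR) * HOURS_PER_MONTH
        storage = report["storage_gb_used"] * PRICE_PER_GB_STORAGE_MONTH
        total += compute + storage
    return round(total, 2)


cluster_report = [
    {"peak_cpu_cores_used": 3, "peak_ram_gb_used": 10, "storage_gb_used": 500},
]
print(estimate_monthly_cost(cluster_report))
```

The estimate is driven by what the servers actually use rather than by how they were provisioned, which is the information an architecture diagram or policy document does not contain.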

Server cluster architecture diagram is not the best resource to help estimate cloud costs, because it only shows the logical and physical structure of the on-premises server cluster, such as the number, type, and location of the servers, and the connections and dependencies between them. This information can help to understand the high-level design and requirements of the server cluster, but it does not provide enough details about the actual usage and performance of the servers, which are more relevant for cloud cost estimation.

Industry benchmarks are not the best resource to help estimate cloud costs, because they only show the average or standard performance and cost of similar server clusters in the same industry or domain. Industry benchmarks can help to compare and evaluate the on-premises server cluster against the best practices and expectations of the market, but they do not reflect the specific needs and characteristics of the server cluster, which are more important for cloud cost estimation.

Resource management policy is not the best resource to help estimate cloud costs, because it only shows the rules and procedures for managing the on-premises server cluster, such as the roles and responsibilities, the service level agreements, the security and compliance standards, and the backup and recovery plans. Resource management policy can help to ensure the quality and reliability of the server cluster, but it does not provide enough information about the actual usage and performance of the servers, which are more critical for cloud cost estimation.


A cloud administrator wants to ensure nodes are added automatically when the load on a web cluster increases. Which of the following should be implemented?

Autonomous systems

Infrastructure as code

Right-sizing

Autoscaling

Autoscaling is a cloud computing feature that enables organizations to scale cloud services such as server capacities or virtual machines up or down automatically, based on defined situations such as traffic or utilization levels. Autoscaling helps to ensure that nodes are added automatically when the load on a web cluster increases, and removed when the load decreases, to optimize performance and costs. Autoscaling can be configured using built-in mechanisms or custom implementations, depending on the cloud service and the specific requirements.
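The scaling decision described above can be sketched as a simple threshold rule. This is a minimal illustration, not any provider's autoscaling API: the function name, thresholds, and node limits are all assumptions.

```python
def desired_node_count(current_nodes, avg_cpu_percent,
                       scale_out_at=70, scale_in_at=30,
                       min_nodes=2, max_nodes=10):
    """Return the node count a threshold-based autoscaler would target.

    Adds one node when average CPU exceeds scale_out_at, removes one when
    it drops below scale_in_at, and always stays within the node limits.
    """
    if avg_cpu_percent > scale_out_at:
        target = current_nodes + 1
    elif avg_cpu_percent < scale_in_at:
        target = current_nodes - 1
    else:
        target = current_nodes
    return max(min_nodes, min(max_nodes, target))


# Load rises, then falls back.
nodes = 2
for cpu in (85, 90, 50, 20):
    nodes = desired_node_count(nodes, cpu)
    print(f"avg CPU {cpu}% -> {nodes} nodes")
```

Real autoscaling services typically add cooldown periods and multiple metrics; the sketch keeps only the core idea of adding and removing nodes in response to load.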

Autonomous systems are networks that are administered by a single entity and have a common routing policy. Autonomous systems are not related to autoscaling, but rather to network connectivity and routing protocols.

Infrastructure as code is a practice of managing and provisioning cloud resources using code or scripts, rather than manual processes or graphical interfaces. Infrastructure as code can help to automate and standardize cloud deployments, but it does not necessarily imply autoscaling, unless the code or scripts include logic for scaling resources based on demand.

Right-sizing is a technique of optimizing cloud resources to match the actual needs and usage patterns of an application or service. Right-sizing can help to reduce costs and improve efficiency, but it does not involve adding or removing nodes automatically based on load. Right-sizing is usually done periodically or on-demand, rather than continuously.


Which of the following is MOST likely to use a CDN?

Realty listing website

Video streaming service

Email service provider

Document management system

A CDN (content delivery network) is a network of distributed servers that store and deliver content to users based on their geographic location and network conditions. A CDN can improve the performance, availability, scalability, and security of web-based applications and services by reducing the latency, bandwidth, and load on the origin server. A CDN is most likely to be used by a video streaming service, which typically involves large amounts of data, high demand, and diverse audiences. A video streaming service can benefit from using a CDN by caching and streaming the video content from the nearest or best-performing server to the user, thus enhancing the user experience and reducing the cost and complexity of the service.
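The caching behavior described above can be sketched with a toy model: each request goes to the lowest-latency edge server, which serves from its cache and contacts the origin only on a miss. The class, server names, latencies, and content path are hypothetical and exist only for this illustration.

```python
class EdgeServer:
    """A toy CDN edge node with an in-memory cache."""

    def __init__(self, name, latency_ms):
        self.name = name
        self.latency_ms = latency_ms  # assumed latency to the requesting user
        self.cache = {}

    def fetch(self, path, origin):
        """Return (content, 'hit' or 'miss'); pull from origin on a miss."""
        if path in self.cache:
            return self.cache[path], "hit"
        self.cache[path] = origin[path]  # only a miss loads the origin server
        return self.cache[path], "miss"


def nearest_edge(edges):
    """Pick the edge server with the lowest latency to the user."""
    return min(edges, key=lambda edge: edge.latency_ms)


origin = {"/video/intro.mp4": b"video-bytes"}
edges = [EdgeServer("edge-us", 140), EdgeServer("edge-eu", 25)]

edge = nearest_edge(edges)
for _ in range(2):
    _, status = edge.fetch("/video/intro.mp4", origin)
    print(edge.name, status)
```

After the first request, repeated views of the same video are served from the edge cache, which is where the latency and origin-load savings for a streaming service come from.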

A realty listing website is a web-based application that allows users to search, view, and compare properties for sale or rent. A realty listing website may use a CDN to improve the performance and availability of the website, especially if it has a large number of images, videos, or other media files. However, a realty listing website is not as likely to use a CDN as a video streaming service, since the content is not as dynamic, the demand is not as high, and the audience is not as diverse.

An email service provider is a company that offers email hosting, management, and delivery services to users. An email service provider may use a CDN to improve the security and reliability of the email service, especially if it has a large number of users, messages, or attachments. However, an email service provider is not as likely to use a CDN as a video streaming service, since the content is not as public, the performance is not as critical, and the location is not as relevant.

A document management system is a software application that allows users to create, store, organize, and share documents. A document management system may use a CDN to improve the scalability and accessibility of the document storage and retrieval, especially if it has a large number of documents, users, or collaborators. However, a document management system is not as likely to use a CDN as a video streaming service, since the content is not as large, the demand is not as variable, and the audience is not as global. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, page 165-166; CompTIA Cloud Essentials+ Certification Training, CertMaster Learn for Cloud Essentials+, Module 4: Management and Technical Operations, Lesson 4.2: Cloud Networking, Topic 4.2.4: Content Delivery Networks

A company's finance team is reporting increased cloud costs against the allocated cloud budget. Which of the following is the BEST approach to match some of the cloud operating costs with the appropriate departments?

Right-sizing

Scaling

Chargeback

Showback

Chargeback is the best approach to match some of the cloud operating costs with the appropriate departments. Chargeback is a process where the IT department bills each department for the amount of cloud resources they use, such as compute, storage, network, or software. Chargeback can help the company to allocate the cloud costs more accurately and fairly, as well as to encourage the departments to optimize their cloud consumption and reduce waste. Chargeback can also provide the company with more visibility and accountability of the cloud usage and spending across the organization [1][2].

Chargeback is different from showback, which is a process where the IT department shows each department the amount of cloud resources they use, but does not charge them for it. Showback can also help the company to increase the awareness and transparency of the cloud costs, but it may not have the same impact on the behavior and efficiency of the departments as chargeback [1][2].
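The difference between the two approaches can be sketched in a few lines: both aggregate usage costs per department, and only chargeback marks the result as billed. The record layout, department names, and amounts below are assumptions for illustration.

```python
from collections import defaultdict


def cost_by_department(usage_records):
    """Sum the cost of each usage record under its department tag."""
    totals = defaultdict(float)
    for record in usage_records:
        totals[record["department"]] += record["cost"]
    return dict(totals)


def build_statements(usage_records, mode):
    """Build per-department statements.

    mode="showback" only reports the cost; mode="chargeback" also marks
    the amount as billed to the department.
    """
    if mode not in ("chargeback", "showback"):
        raise ValueError("mode must be 'chargeback' or 'showback'")
    totals = cost_by_department(usage_records)
    return [
        {"department": dept, "cost": round(cost, 2), "billed": mode == "chargeback"}
        for dept, cost in sorted(totals.items())
    ]


# Hypothetical tagged usage records.
records = [
    {"department": "marketing", "resource": "storage", "cost": 120.0},
    {"department": "engineering", "resource": "compute", "cost": 900.0},
    {"department": "marketing", "resource": "compute", "cost": 80.0},
]

for statement in build_statements(records, "chargeback"):
    print(statement)
```

Either mode depends on resources being tagged with an owning department; untagged usage cannot be attributed.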

Right-sizing and scaling are not approaches to match the cloud costs with the departments, but rather techniques to adjust the cloud resources to the actual demand and performance of the applications or services. Right-sizing and scaling can help the company to save money and improve the cloud utilization, but they do not address the issue of cost allocation or attribution [3][4].

References: CompTIA Cloud Essentials+ Certification Exam Objectives; CompTIA Cloud Essentials+ Study Guide, Chapter 2: Business Principles of Cloud Environments; IT Chargeback vs Showback: What’s The Difference? [2]; Cloud Essentials+ Certification Training

A business analyst at a large multinational organization has been tasked with checking to ensure an application adheres to GDPR rules. Which of the following topics would be BEST for the analyst to research?

Data integrity

Industry-based requirements

ISO certification

Regulatory concerns

Regulatory concerns is the best topic for the analyst to research. The General Data Protection Regulation (GDPR) is a European Union regulation that governs how organizations collect, process, store, and transfer the personal data of individuals in the EU, so checking that an application adheres to GDPR rules is a regulatory compliance task. A large multinational organization is likely to handle personal data across several jurisdictions, which makes it important to understand which regulations apply to the application and its data.

The other options are less suitable. Data integrity concerns the accuracy and consistency of data; it is only one aspect of data protection and does not by itself cover GDPR obligations. Industry-based requirements are standards tied to a particular sector, such as PCI DSS for payment card data, whereas GDPR applies across industries. ISO certification demonstrates conformance with an international standard, such as ISO/IEC 27001 for information security management, but holding a certification does not by itself establish GDPR compliance.

Which of the following types of risk is MOST likely to be associated with moving all data to one cloud provider?

Vendor lock-in

Data portability

Network connectivity

Data sovereignty

Vendor lock-in is the type of risk that is most likely to be associated with moving all data to one cloud provider. Vendor lock-in refers to the situation where a customer is dependent on a particular vendor’s products and services to such an extent that switching to another vendor becomes difficult, time-consuming, or expensive. Vendor lock-in can limit the customer’s flexibility, choice, and control over their cloud environment, and expose them to potential issues such as price increases, service degradation, security breaches, or compliance violations. Vendor lock-in can also prevent the customer from taking advantage of new technologies, innovations, or opportunities offered by other vendors. Vendor lock-in can be caused by various factors, such as proprietary formats, standards, or protocols, lack of interoperability or compatibility, contractual obligations or penalties, or high switching costs [1][2].

References: [1] Moving All Data to One Cloud Provider: Understanding Risks; [2] CompTIA Cloud Essentials+ Study Guide, Chapter 2: Business Principles of Cloud Environments; [3] CompTIA Cloud Essentials+ Certification Exam Objectives

A company with critical resources in the cloud needs to ensure data is available in multiple datacenters around the world.

Which of the following BEST meets the company's needs?

Auto-scaling

Geo-redundancy

Disaster recovery

High availability

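Geo-redundancy is the best option for a company that needs to ensure data availability in multiple datacenters around the world. Geo-redundancy is the duplication of IT infrastructure and data across geographically dispersed locations, such as different regions or continents1. For cloud-based applications, this means the data survives the loss of an entire site or region and can be served from a location closer to the users who request it.

The other options are not as suitable as geo-redundancy for the company’s needs. Auto-scaling adjusts the amount of compute capacity to match demand but does not place data in multiple locations. Disaster recovery is the broader plan for restoring systems after an incident, of which geo-redundancy can be one component. High availability keeps a service running through component failures, but it can be achieved within a single datacenter or region and does not by itself guarantee copies around the world.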

An analyst is reviewing a report on a company's cloud resource usage. The analyst has noticed many of the cloud instances operate at a fraction of the full processing capacity. Which of the following actions should the analyst consider to lower costs and improve efficiency?

Consolidating into fewer instances

Using spot instances

Right-sizing compute resource instances

Negotiating better prices on the company's reserved instances

Right-sizing compute resource instances is the process of matching instance types and sizes to workload performance and capacity requirements at the lowest possible cost. It’s also the process of identifying opportunities to eliminate or downsize instances without compromising capacity or other requirements, which results in lower costs and higher efficiency1. Right-sizing is a key mechanism for optimizing cloud costs, but it is often ignored or delayed by organizations when they first move to the cloud. They lift and shift their environments and expect to right-size later. Speed and performance are often prioritized over cost, which results in oversized instances and a lot of wasted spend on unused resources2.

Right-sizing compute resource instances is the best action that the analyst should consider to lower costs and improve efficiency, as it can help reduce the amount of resources and money spent on instances that operate at a fraction of the full processing capacity. Right-sizing can also improve the performance and reliability of the instances by ensuring that they have enough resources to meet the workload demands. Right-sizing is an ongoing process that requires continuous monitoring and analysis of the instance usage and performance metrics, as well as the use of tools and frameworks that can simplify and automate the right-sizing decisions1.
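The right-sizing decision described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the instance catalog, the prices, and the 30% headroom are hypothetical values, not figures from any provider, and real tools also weigh memory, storage, and network metrics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    hourly_cost: float  # hypothetical price, not a real provider rate


# Hypothetical catalog of available instance sizes.
CATALOG = [
    InstanceType("small", 2, 0.05),
    InstanceType("medium", 4, 0.10),
    InstanceType("large", 8, 0.20),
    InstanceType("xlarge", 16, 0.40),
]


def right_size(current: InstanceType, peak_cpu_utilization: float,
               headroom: float = 0.3) -> InstanceType:
    """Return the cheapest catalog type that covers observed peak demand plus headroom.

    peak_cpu_utilization is a fraction of the current instance's capacity (0.0 to 1.0).
    If nothing in the catalog is large enough, keep the current instance.
    """
    needed_vcpus = current.vcpus * peak_cpu_utilization * (1 + headroom)
    candidates = [t for t in CATALOG if t.vcpus >= needed_vcpus]
    if not candidates:
        return current
    return min(candidates, key=lambda t: t.hourly_cost)


if __name__ == "__main__":
    oversized = CATALOG[3]  # an xlarge instance running at 20% of capacity
    print(right_size(oversized, peak_cpu_utilization=0.20).name)
```

An instance that peaks at a fraction of its capacity is moved to a smaller, cheaper type, while a busy instance is left alone.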

Consolidating into fewer instances, using spot instances, or negotiating better prices on the company’s reserved instances are not the best actions that the analyst should consider to lower costs and improve efficiency, as they have some limitations and trade-offs compared to right-sizing. Consolidating into fewer instances can reduce the number of instances, but it does not necessarily optimize the type and size of the instances. Consolidating can also introduce performance and availability issues, such as increased latency, reduced redundancy, or single points of failure3. Using spot instances can reduce the cost of instances, but it also introduces the risk of interruption and termination, as spot instances are subject to fluctuating prices and availability based on the supply and demand of the cloud provider4. Negotiating better prices on the company’s reserved instances can reduce the cost of instances, but it also requires a long-term commitment and upfront payment, which reduces the flexibility and scalability of the cloud environment5. References: Right Sizing - Cloud Computing Services; The 6-Step Guide To Rightsizing Your Instances - CloudZero; Consolidating Cloud Services: How to Do It Right | CloudHealth by VMware; Spot Instances - Amazon Elastic Compute Cloud; Reserved Instances - Amazon Elastic Compute Cloud.

A cloud administrator needs to enable users to access business applications remotely while ensuring these applications are only installed on company-controlled equipment. All users require the ability to modify personal working environments. Which of the following is the BEST solution?

SSO

VDI

SSH

VPN

The best solution for this scenario is Virtual Desktop Infrastructure (VDI). VDI is a cloud service model that provides users with virtual desktops that run on a centralized server. Users can access their virtual desktops remotely from any device, such as a laptop, tablet, or smartphone, using a thin client or a web browser. VDI enables users to access business applications without installing them on their own devices, which ensures that these applications are only installed on company-controlled equipment. VDI also allows users to modify their personal working environments, such as desktop settings, wallpapers, or preferences, which are saved on the server and persist across sessions. VDI can offer benefits such as improved security, reduced hardware costs, centralized management, and enhanced user experience. The other options are not the best solutions for this scenario. Single Sign-On (SSO) is a cloud service that enables users to log in to multiple applications or systems with one set of credentials, which simplifies authentication and improves security. However, SSO does not provide users with remote access to business applications or personal working environments. Secure Shell (SSH) is a network protocol that allows secure remote access to a server or a device using encryption and authentication. SSH can be used to execute commands, transfer files, or tunnel network traffic. However, SSH does not provide users with virtual desktops or business applications. Virtual Private Network (VPN) is a network technology that creates a secure connection between a remote device and a private network over a public network, such as the Internet. VPN can be used to access network resources, such as files, printers, or applications, that are not available otherwise. However, VPN does not provide users with virtual desktops or personal working environments. 
References: CompTIA Cloud Essentials+ Certification Study Guide, Second Edition (Exam CLO-002), Chapter 2: Cloud Concepts, Section 2.3: Cloud Service Models, p. 64-65

A business analyst is writing a disaster recovery strategy. Which of the following should the analyst include in the document? (Select THREE).

Capacity on demand

Backups

Resource tagging

Replication

Elasticity

Automation

Geo-redundancy

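A disaster recovery strategy is a plan that defines how an organization can recover its data, systems, and operations in the event of a disaster, such as a natural calamity, a cyberattack, or a human error. Of the options listed, the elements that belong in a disaster recovery strategy are backups, replication, and geo-redundancy12. Backups provide restorable copies of data, replication keeps a synchronized copy of data or systems at another location, and geo-redundancy places those copies in geographically separate datacenters so that a regional disaster does not affect every copy.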

References: [CompTIA Cloud Essentials+ Certification Study Guide, Second Edition (Exam CLO-002)], Chapter 4: Risk Management, pages 105-106.

A large online car retailer needs to leverage the public cloud to host photos that must be accessible from anywhere and available at anytime. Which of the following cloud storage types would be cost-effective and meet the requirements?

Cold storage

File storage

Block storage

Object storage

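Object storage is a cloud storage type that would be cost-effective and meet the requirements of a large online car retailer that needs to host photos that must be accessible from anywhere and available at any time. Object storage stores data as objects, which consist of data, metadata, and a unique identifier. It is ideal for storing large amounts of unstructured data, such as photos, videos, audio, documents, and web pages. Object storage offers several advantages for the online car retailer: objects are typically retrieved over HTTP or HTTPS, so the photos can be reached from anywhere with an internet connection; capacity grows with the size of the photo catalog without pre-provisioning volumes; and the cost per gigabyte is generally lower than that of block or file storage. Cold storage is cheaper still, but it is intended for rarely accessed data and would not keep the photos readily available at any time.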

References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 2: Cloud Concepts, Section 2.2: Cloud Technologies, Page 55. What Is Cloud Storage? Definition, Types, Benefits, and Best Practices - Spiceworks1 What Is a Public Cloud? | Google Cloud2

A business analyst has been drafting a risk response for a vulnerability that was identified on a server. After considering the options, the analyst decides to decommission the server. Which of the following describes this approach?

Mitigation

Transference

Acceptance

Avoidance

Avoidance is a risk response strategy that involves eliminating the threat or uncertainty associated with a risk by removing the cause or the source of the risk. Avoidance can help to prevent the occurrence or the impact of a negative risk, but it may also result in the loss of potential opportunities or benefits. Avoidance is usually applied when the risk is too high or too costly to mitigate, transfer, or accept12

The business analyst is using the avoidance strategy by decommissioning the server that has a vulnerability. By doing so, the analyst is eliminating the possibility of the vulnerability being exploited or causing harm to the system or the data. However, the analyst is also losing the functionality or the value that the server provides, and may need to find an alternative solution or resource.

Mitigation is not the correct answer, because mitigation is a risk response strategy that involves reducing the probability or the impact of a negative risk by implementing actions or controls that can minimize or counteract the risk. Mitigation can help to lower the exposure or the severity of a risk, but it does not eliminate the risk completely. Mitigation is usually applied when the risk is moderate or manageable, and the cost of mitigation is justified by the potential benefit12

Transference is not the correct answer, because transference is a risk response strategy that involves shifting the responsibility or the impact of a negative risk to a third party, such as a vendor, a partner, or an insurer. Transference can help to share or distribute the risk, but it does not reduce or remove the risk. Transference is usually applied when the risk is beyond the control or the expertise of the organization, and the cost of transference is acceptable or affordable12

Acceptance is not the correct answer, because acceptance is a risk response strategy that involves acknowledging the existence or the possibility of a negative risk, and being prepared to deal with the consequences if the risk occurs. Acceptance can be passive, which means no action is taken to address the risk, or active, which means a contingency plan or a reserve is established to handle the risk. Acceptance is usually applied when the risk is low or inevitable, and the cost of avoidance, mitigation, or transference is higher than the cost of acceptance12

References: 1: 2: page 50

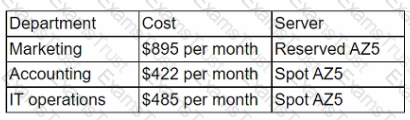

A large enterprise has the following invoicing breakdown of current cloud consumption spend:

The level of resources consumed by each department is relatively similar. Which of the following is MOST likely affecting monthly costs?

The servers in use by the marketing department are in an availability zone that is generally expensive.

The servers in use by the accounting and IT operations departments are in different geographic zones with lower pricing.

The accounting and IT operations departments are choosing to bid on non-committed resources.

The marketing department likely stores large media files on its servers, leading to increased storage costs.

The marketing department likely stores large media files on its servers, leading to increased storage costs. This is because the marketing department is responsible for creating and distributing various types of digital content, such as videos, images, podcasts, and webinars, to promote the products and services of the enterprise. These media files tend to be large in size and require more storage space than other types of data, such as text documents or spreadsheets. Therefore, the marketing department consumes more storage resources than the other departments, which increases the monthly cloud costs for the enterprise. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 3: Cloud Service and Delivery Models, Section 3.2: Cloud Storage, Page 97

A company decommissioned its testing environment. Which of the following should the company do FIRST to avoid charges?

Detach and delete disposable resources.

Empty the logging directory.

Delete infrastructure as code templates.

Disable alerts and alarms.

Disposable resources are cloud resources that are not needed for the long-term operation of an application or service, and can be easily created and deleted on demand. Examples of disposable resources include temporary storage, spot instances, and disposable virtual machines. Disposable resources can help to reduce costs and improve flexibility, but they also incur charges while they are in use or attached to other resources. Therefore, when a company decommissions its testing environment, it should detach and delete disposable resources first to avoid charges12.

Emptying the logging directory is not the best option to avoid charges, because a logging directory is a folder that stores the records of events and activities that occur in an application or service. A logging directory can help to monitor and troubleshoot the performance and issues of the application or service, but it does not directly affect the charges of the cloud resources. Emptying the logging directory may also result in the loss of valuable information and insights that can be used for future improvement or analysis.

Deleting infrastructure as code templates is not the best option to avoid charges, because infrastructure as code templates are files that define and configure the cloud resources using code or scripts. Infrastructure as code templates can help to automate and standardize the deployment and management of the cloud resources, but they do not directly affect the charges of the cloud resources. Deleting infrastructure as code templates may also make it harder to recreate or modify the cloud resources in the future.

Disabling alerts and alarms is not the best option to avoid charges, because alerts and alarms are notifications that are triggered by certain conditions or thresholds that are set for the cloud resources. Alerts and alarms can help to notify and respond to the changes or issues of the cloud resources, but they do not directly affect the charges of the cloud resources. Disabling alerts and alarms may also make it difficult to detect and resolve any problems or anomalies that may occur in the cloud resources.

References: 1: 2: page 46

Which of the following metrics defines how much data loss a company can tolerate?

RTO

TCO

MTTR

ROI

RPO

RPO stands for recovery point objective, which is the maximum amount of data loss that a company can tolerate in the event of a disaster, failure, or disruption. RPO is measured in time, from the point of the incident to the last valid backup of the data. RPO helps determine how frequently the company needs to back up its data and how much data it can afford to lose. For example, if a company has an RPO of one hour, it means that it can lose up to one hour’s worth of data without causing significant harm to the business. Therefore, it needs to back up its data at least every hour to meet its RPO.
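The relationship between RPO and backup frequency can be sketched as follows. This is a simplified illustration: the function names are hypothetical, and it assumes the worst-case data loss equals the full interval between two backups.

```python
from datetime import datetime, timedelta


def data_loss_window(last_backup: datetime, incident: datetime) -> timedelta:
    """Data written after the last valid backup and before the incident is lost."""
    return incident - last_backup


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst case, an incident strikes just before the next backup runs,
    so the backup interval must not exceed the RPO."""
    return backup_interval <= rpo


if __name__ == "__main__":
    rpo = timedelta(hours=1)
    print(meets_rpo(timedelta(minutes=60), rpo))  # hourly backups against a one-hour RPO
    print(meets_rpo(timedelta(hours=4), rpo))     # four-hourly backups against a one-hour RPO
```

With a one-hour RPO, hourly backups satisfy the objective, whereas a four-hour schedule could lose up to four hours of data.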

RPO is different from other metrics such as RTO, TCO, MTTR, and ROI. RTO stands for recovery time objective, which is the maximum amount of time that a company can tolerate for restoring its data and resuming its normal operations after a disaster. TCO stands for total cost of ownership, which is the sum of all the costs associated with acquiring, maintaining, and operating a system or service over its lifetime. MTTR stands for mean time to repair, which is the average time that it takes to fix a faulty component or system. ROI stands for return on investment, which is the ratio of the net profit to the initial cost of a project or investment. References: Recovery Point Objective: A Critical Element of Data Recovery - G2, What is a Recovery Point Objective? RPO Definition + Examples, Cloud Computing Pricing Models - CompTIA Cloud Essentials+ (CLO-002) Cert Guide

A company’s current billing agreement is static. If the company were to migrate to an entirely IaaS-based setup, which of the following billing concepts would the company be adopting?

Enterprise agreement

Perpetual

Variable cost

Fixed cost

Variable cost is a billing concept that means the customer pays only for the resources they consume, and the cost varies depending on the usage. This is different from fixed cost, which means the customer pays a predetermined amount regardless of the usage. IaaS-based setups typically use variable cost billing, as the customer can provision and deprovision resources on demand, and only pay for what they use. This allows the customer to optimize their costs and scale their resources according to their needs123. References: [CompTIA Cloud Essentials+ Certification Study Guide, Second Edition (Exam CLO-002)], Chapter 1: Cloud Principles and Design, pages 17-18.
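A small arithmetic sketch can make the difference between the two billing concepts concrete. The rate, the flat fee, and the usage figures below are hypothetical and chosen only for illustration. Amounts are in cents to keep the arithmetic exact.

```python
HOURLY_RATE_CENTS = 10          # hypothetical pay-as-you-go rate per instance-hour
FIXED_MONTHLY_FEE_CENTS = 3000  # hypothetical flat fee under a static agreement


def variable_bill(instance_hours: int, rate_cents: int = HOURLY_RATE_CENTS) -> int:
    """Variable cost: the bill follows consumption."""
    return instance_hours * rate_cents


def fixed_bill(instance_hours: int, fee_cents: int = FIXED_MONTHLY_FEE_CENTS) -> int:
    """Fixed cost: the bill is the same regardless of consumption."""
    return fee_cents


if __name__ == "__main__":
    monthly_usage = {"January": 100, "February": 400, "March": 250}
    for month, hours in monthly_usage.items():
        print(month, variable_bill(hours), fixed_bill(hours))
```

Under these assumed numbers, the variable bill moves with usage each month, while the fixed bill stays the same whether the resources are used heavily or barely at all.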

Which of the following cloud computing characteristics is a facilitator of a company's organizational agility?

Professional services

Managed services

Reduced time to market

Licensing model

Organizational agility is the ability of a company to quickly adapt to changing market and environmental conditions in a profitable and efficient way. Cloud computing can facilitate organizational agility by providing rapid provisioning of IT resources, scalability, flexibility, and cost-effectiveness. One of the benefits of cloud computing is reduced time to market, which means that a company can launch new products or services faster and more efficiently than its competitors. This can give the company a competitive edge and increase customer satisfaction. Reduced time to market is a characteristic of cloud computing that enables organizational agility, not a result of it. Therefore, it is the correct answer. References: Cloud Computing as a Drive for Strategic Agility in Organizations, How cloud computing can drive business agility, Agility on Cloud - A Vital Part of Cloud Computing

Which of the following security concerns is BEST addressed by moving systems to the cloud?

Availability

Authentication

Confidentiality

Integrity

Availability is the security concern that is best addressed by moving systems to the cloud. Availability refers to the ability of a system or service to be accessible and functional when needed by authorized users. Availability is one of the key benefits of cloud computing, as it provides high reliability, scalability, and performance for the cloud systems and services. Cloud providers use various techniques and technologies to ensure availability, such as redundant infrastructure, multiple availability zones and regions, load balancing, and automatic failover.

Availability is different from other security concerns, such as authentication, confidentiality, or integrity. Authentication is the process of verifying the identity and credentials of a user or system before granting access to the cloud systems and services. Confidentiality is the process of protecting the data and information from unauthorized access or disclosure, such as by using encryption, access control, or data masking. Integrity is the process of ensuring the data and information are accurate, complete, and consistent, and have not been modified or corrupted by unauthorized or malicious parties, such as by using hashing, digital signatures, or checksums. References: Cloud Computing Availability - CompTIA Cloud Essentials+ (CLO-002) Cert Guide, Cloud Security – Amazon Web Services (AWS), Azure infrastructure availability - Azure security | Microsoft Learn, What is Cloud Security? Cloud Security Defined | IBM

A cloud administrator needs to ensure as much uptime as possible for an application. The application has two database servers. If both servers go down simultaneously, the application will go down. Which of the following must the administrator configure to ensure the CSP does not bring both servers down for maintenance at the same time?

Backups

Availability zones

Autoscaling

Replication

Availability zones are logical data centers within a cloud region that are isolated and independent from each other. Availability zones have their own power, cooling, and networking infrastructure, and are connected by low-latency networks. Availability zones help to ensure high availability and fault tolerance for cloud applications by allowing customers to deploy their resources across multiple zones within a region. If one availability zone experiences an outage or maintenance, the other zones can continue to operate and serve the application12

To ensure the CSP does not bring both servers down for maintenance at the same time, the cloud administrator must configure the application to use availability zones. The administrator can deploy the two database servers in different availability zones within the same region, and enable replication and synchronization between them. This way, the application can access either server in case one of them is unavailable due to maintenance or failure. The administrator can also use load balancers and health checks to distribute the traffic and monitor the status of the servers across the availability zones34
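The placement idea above can be illustrated with a short check. This is a sketch only: the server and zone names are hypothetical, and it models zone maintenance simply as every server in that zone being unavailable.

```python
def spread_across_zones(placements: dict) -> bool:
    """placements maps server name -> availability zone.
    Returns True when no two servers share a zone."""
    zones = list(placements.values())
    return len(set(zones)) == len(zones)


def servers_up_during_maintenance(placements: dict, zone_in_maintenance: str) -> list:
    """Servers that remain reachable while one zone is taken down."""
    return sorted(name for name, zone in placements.items()
                  if zone != zone_in_maintenance)


if __name__ == "__main__":
    placements = {"db-1": "zone-a", "db-2": "zone-b"}  # hypothetical names
    print(spread_across_zones(placements))
    print(servers_up_during_maintenance(placements, "zone-a"))
```

When both database servers sit in the same zone, maintenance on that zone leaves no server available, which is exactly the situation the administrator is trying to avoid.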

Backups are not the best option to ensure the CSP does not bring both servers down for maintenance at the same time, because backups are copies of data that are stored in another location for recovery purposes. Backups can help to restore the data in case of data loss or corruption, but they do not provide high availability or fault tolerance for the application. Backups are usually performed periodically or on-demand, rather than continuously. Backups also require additional storage space and bandwidth, and may incur additional costs.

Autoscaling is not the best option to ensure the CSP does not bring both servers down for maintenance at the same time, because autoscaling is a feature that allows customers to scale their cloud resources up or down automatically, based on predefined conditions such as traffic or utilization levels. Autoscaling can help to optimize the performance and costs of the application, but it does not guarantee high availability or fault tolerance for the application. Autoscaling may not be able to scale the resources fast enough to handle sudden spikes or drops in demand, and it may also introduce additional complexity and overhead for managing the resources.

Replication is not the best option to ensure the CSP does not bring both servers down for maintenance at the same time, because replication is a process of copying and synchronizing data across multiple locations or devices. Replication can help to improve the availability and consistency of the data, but it does not prevent the CSP from bringing both servers down for maintenance at the same time. Replication also depends on the availability and connectivity of the locations or devices where the data is replicated, and it may also increase the network traffic and storage requirements.

References: 1: 2: page 42 3: 4: : page 44 : page 46 : page 48

Which of the following BEST explains the concept of RTOs for restoring servers to operational use?

To reduce the amount of data loss that can occur in the event of a server failure

To ensure the restored server is available and operational within a given window of time

To ensure the data on the restored server is current within a given window of time

To reduce the amount of time a particular server is unavailable and offline

RTO stands for Recovery Time Objective, which is a metric that measures the maximum acceptable amount of time that an application or a service can be offline or unavailable after a disruption, such as a server failure, a power outage, or a natural disaster. RTO is a key indicator of the disaster recovery capabilities and objectives of an organization, as it reflects the level of tolerance or impact of downtime on the business operations, reputation, and revenue. RTO is usually expressed in hours, minutes, or seconds, and it can vary depending on the criticality and priority of the application or the service. RTO can help an organization to determine the optimal level of backup, redundancy, and recovery for the application or the service, as well as the potential costs and risks of downtime. RTO can also help the organization to choose the appropriate cloud service model, provider, and deployment option that can meet the disaster recovery requirements and expectations of the organization and its customers12

Therefore, the best explanation of the concept of RTOs for restoring servers to operational use is to ensure the restored server is available and operational within a given window of time. An RTO does not itself reduce downtime; it sets the maximum acceptable downtime that the recovery plan must meet. Reducing data loss and keeping restored data current within a given window describe the RPO rather than the RTO.

References: CompTIA Cloud Essentials+ Certification Exam Objectives3, CompTIA Cloud Essentials+ Study Guide, Chapter 7: Cloud Security4, Cloud Essentials+ Certification Training

Which of the following is true about the use of technologies such as JSON and XML for cloud data interchange and automation tasks?

It can cause cloud vendor lock-in

The company needs to define a specific programming language for cloud management.

The same message format can be used across different cloud platforms.

It is considered an unsafe format of communication.

JSON and XML are both data serialization formats that allow you to exchange data across different applications, platforms, or systems in a standardized manner. They are independent of any programming language and can be used across different cloud platforms. They do not cause cloud vendor lock-in, as they are open and interoperable formats. They do not require the company to define a specific programming language for cloud management, as they can be parsed and processed by various languages. They are not considered unsafe formats of communication, as they can be encrypted and validated for security purposes. References: CompTIA Cloud Essentials+ Certification | CompTIA IT Certifications, CompTIA Cloud Essentials+, CompTIA Cloud Essentials CLO-002 Certification Study Guide
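As a brief illustration of this portability, the same message can be written to and read back from both formats using only the Python standard library. The message fields and values are hypothetical. Any other language with JSON and XML parsers could consume the same text.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical automation message; the field names are illustrative only.
message = {"action": "start", "resource": "vm-01", "region": "example-region"}


def to_json(msg: dict) -> str:
    """Serialize the message as JSON text."""
    return json.dumps(msg, sort_keys=True)


def to_xml(msg: dict, root_tag: str = "message") -> str:
    """Serialize the message as XML text, one child element per field."""
    root = ET.Element(root_tag)
    for key in sorted(msg):
        ET.SubElement(root, key).text = str(msg[key])
    return ET.tostring(root, encoding="unicode")


def from_xml(text: str) -> dict:
    """Parse the XML text back into a flat dictionary of strings."""
    return {child.tag: child.text for child in ET.fromstring(text)}


if __name__ == "__main__":
    print(to_json(message))
    print(to_xml(message))
```

Neither format ties the sender or receiver to a particular programming language or cloud platform, which is the point of the correct answer.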

An organization has experienced repeated occurrences of system configurations becoming incorrect over time. After implementing corrections on all system configurations across the enterprise, the Chief Information Security Officer (CISO) purchased an automated tool that will monitor system configurations and identify any deviations. In the future, which of the following should be used to identify incorrectly configured systems?

Baseline

Gap analysis

Benchmark

Current and future requirements

A baseline is a standard or reference point that is used to measure and compare the current state of a system or process. A baseline can be established for various aspects of a system, such as performance, security, configuration, functionality, or quality. A baseline can help to identify deviations, anomalies, or changes that occur over time, and to evaluate the impact of those changes on the system or process. A baseline can also help to restore a system or process to its original or desired state, by providing a reference for corrective actions. In this case, the organization has experienced repeated occurrences of system configurations becoming incorrect over time, which can affect the security, reliability, and functionality of the systems. After implementing corrections on all system configurations across the enterprise, the CISO purchased an automated tool that will monitor system configurations and identify any deviations. In the future, the organization should use a baseline to identify incorrectly configured systems, by comparing the current system configurations with the baseline system configurations that were established after the corrections. A baseline can help the organization to detect and prevent configuration drift, which is the gradual but unintentional divergence of a system’s actual configuration settings from its secure baseline configuration. A baseline can also help the organization to apply configuration management, which is the process of planning, identifying, controlling, and verifying the configuration of a system or process throughout its lifecycle. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 4: Cloud Security, Section 4.2: Cloud Security Concepts, Page 153. What a Baseline Configuration Is and How to Prevent Configuration Drift - Netwrix1 Configuration Baselines - AcqNotes2
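The comparison against a baseline can be sketched as a simple dictionary diff. This is a minimal illustration, not how any particular monitoring tool works: the setting names and values are hypothetical, and configurations are assumed to be flat key-value pairs.

```python
def find_drift(baseline: dict, current: dict) -> dict:
    """Return {setting: (expected, actual)} for every setting that deviates
    from the baseline. A setting missing on either side is reported as None."""
    drift = {}
    for key in baseline.keys() | current.keys():
        expected = baseline.get(key)
        actual = current.get(key)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift


if __name__ == "__main__":
    # Hypothetical baseline recorded after the corrections were applied.
    baseline = {"ssh_root_login": "no", "firewall": "enabled"}
    # Hypothetical configuration observed later on one system.
    current = {"ssh_root_login": "yes", "firewall": "enabled", "telnet": "enabled"}
    for setting, (expected, actual) in sorted(find_drift(baseline, current).items()):
        print(f"{setting}: expected {expected!r}, found {actual!r}")
```

A system whose configuration matches the baseline produces an empty result. Any non-empty result flags configuration drift to investigate.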

A web application was deployed, and files are available globally to improve user experience. Which of the following technologies is being used?

SAN

CDN

VDI

API

A content delivery network (CDN) is a technology that distributes web content across multiple servers in different geographic locations to improve user experience11. A CDN can be used to deploy web applications and files globally, so that users can access them faster and more reliably from their nearest server12. A CDN also reduces bandwidth consumption and network congestion by caching static content at the edge servers13.

Which of the following DevOps options is used to integrate with cloud solutions?

Provisioning

API

SOA

Automation

API stands for Application Programming Interface, which is a set of rules and protocols that allow different software components or systems to communicate and exchange data. API is used to integrate with cloud solutions because it enables developers to access the cloud services and resources programmatically, without having to deal with the underlying infrastructure or platform details. API also allows for automation, scalability, and interoperability of cloud applications and services.

References: Chapter 3: Cloud Computing Concepts and Models, Section 3.2: Cloud Service Models, Subsection 3.2.1: Software as a Service (SaaS), Page 87; Chapter 4: Cloud Computing Principles and Design, Section 4.3: Cloud Characteristics and Risks, Subsection 4.3.2: Cloud Characteristics, Page 121; from CompTIA Cloud Essentials+ sources.

A startup company wants to develop a new voice assistant that leverages technology that can improve its product based on end user input. Which of the following would MOST likely accomplish this goal?

Big Data

Blockchain

VDI

Machine learning

Machine learning is a technology that enables a voice assistant to improve its product based on end user input. Machine learning is a branch of artificial intelligence that allows systems to learn from data and experience, without being explicitly programmed. Machine learning can help a voice assistant to understand natural language, recognize speech, generate responses, and adapt to user feedback. Machine learning can also help a voice assistant to personalize its service, by learning the preferences, habits, and needs of each user. Machine learning can make a voice assistant more intelligent, accurate, and user-friendly over time. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 2: Cloud Concepts, Section 2.2: Cloud Technologies, Page 55.

Which of the following is a benefit of microservice applications in a cloud environment?

Microservices are dependent on external shared databases found on cloud solutions.

Federation is a mandatory component for an optimized microservice deployment.

The architecture of microservice applications allows the use of auto-scaling.

Microservice applications use orchestration solutions to update components in each service.

Microservice applications are composed of many smaller, loosely coupled, and independently deployable services, each with its own responsibility and technology stack1. One of the benefits of microservice applications in a cloud environment is that they can use auto-scaling, which is the ability to automatically adjust the amount of computing resources allocated to a service based on the current demand2. Auto-scaling can help improve the performance, availability, and cost-efficiency of microservice applications, as it allows each service to scale up or down according to its own needs, without affecting the rest of the application2. Auto-scaling can also help handle unpredictable or variable workloads, such as spikes in traffic or seasonal fluctuations2. Auto-scaling can be implemented using different cloud services, such as Google Kubernetes Engine (GKE) or Cloud Run, which provide both horizontal and vertical scaling options for microservice applications34. References: 1: IBM, What are Microservices?; 2: AWS, What is Auto Scaling?; 3: Google Cloud, Autoscaling Deployments; 4: Google Cloud, Scaling Cloud Run services
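To make the per-service scaling decision concrete, the sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). It is a simplified illustration: the replica bounds are hypothetical defaults, and real autoscalers add tolerances, stabilization windows, and other safeguards omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the observed metric
    (for example, average CPU utilization) is from its target, then clamp
    the result to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # One service runs hot and scales out; another is idle and scales in.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    print(desired_replicas(4, current_metric=30, target_metric=60))
```

Because each microservice is deployed independently, this calculation can run separately for each service, so a busy service scales out without forcing the rest of the application to do the same.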

A company with a variable number of employees would make good use of the cloud model because of:

multifactor authentication

self-service

subscription services

collaboration

A company with a variable number of employees would make good use of the cloud model because of subscription services. Subscription services are a type of cloud pricing model that allows customers to pay a fixed fee for a certain amount of cloud resources or services for a specific period of time, such as monthly or annually. Subscription services can offer benefits such as predictable costs, scalability, flexibility, and reduced upfront investment. A company with a variable number of employees can use subscription services to adjust the cloud resources or services according to the changing demand and size of the workforce, without wasting money on unused capacity or paying extra fees for exceeding the limit. Subscription services can also enable the company to access the latest cloud technologies and features without having to purchase or maintain them. The other options are not the best reasons for a company with a variable number of employees to use the cloud model. Multifactor authentication is a security method that requires users to provide two or more pieces of evidence to verify their identity, such as a password, a code, or a biometric factor. Multifactor authentication can enhance the security of the cloud services, but it is not related to the number of employees. Self-service is a cloud characteristic that allows users to provision, manage, and terminate cloud resources or services on demand, without requiring the intervention of the cloud provider or the IT department. Self-service can improve the efficiency and agility of the cloud services, but it is not related to the number of employees. Collaboration is a cloud benefit that enables users to work together on projects, documents, or tasks using cloud-based tools and platforms, such as online file sharing, video conferencing, or project management. Collaboration can increase the productivity and innovation of the cloud services, but it is not related to the number of employees. 
References: CompTIA Cloud Essentials+ Certification Study Guide, Second Edition (Exam CLO-002), Chapter 1: Cloud Principles and Design, Section 1.2: Cloud Computing Concepts, p. 26-27.
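To make the cost argument concrete, here is a minimal sketch that compares a per-seat subscription billed on actual headcount with capacity sized for the peak month. The price per seat and the monthly headcounts are invented for illustration and do not come from any provider's price list.

```python
PRICE_PER_SEAT = 12  # hypothetical monthly price per user


def monthly_subscription_cost(seats, price_per_seat=PRICE_PER_SEAT):
    """Cost of a per-seat subscription for one month."""
    return seats * price_per_seat


# Hypothetical headcount for a company with seasonal staffing.
headcount_by_month = {"Jan": 40, "Feb": 55, "Mar": 30}

# Subscription model: pay for the seats actually used each month.
subscription_total = sum(
    monthly_subscription_cost(seats) for seats in headcount_by_month.values()
)

# Fixed-capacity model: provision for the peak month all quarter.
peak_seats = max(headcount_by_month.values())
fixed_total = monthly_subscription_cost(peak_seats) * len(headcount_by_month)

print(subscription_total, fixed_total)
```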

A cloud administrator configures a server to insert an entry into a log file whenever an administrator logs in to the server remotely. Which of the following BEST describes the type of policy being used?

Audit

Authorization

Hardening

Access

An audit policy is a set of rules and guidelines that define how to monitor and record the activities and events that occur on a system or network. An audit policy can help track and report the actions of users, applications, processes, or devices, and provide evidence of compliance, security, or performance issues. An audit policy can also help deter unauthorized or malicious activities, as the users know that their actions are being logged and reviewed.

A cloud administrator who configures a server to insert an entry into a log file whenever an administrator logs in to the server remotely is using an audit policy, as they are enabling the collection and recording of a specific event that relates to the access and management of the server. The log file can then be used to verify the identity, time, and frequency of the administrator logins, and to detect any anomalies or suspicious activities.

An authorization policy is a set of rules and guidelines that define what actions or resources a user or a system can access or perform. An authorization policy can help enforce the principle of least privilege, which means that users or systems are only granted the minimum level of access or permissions they need to perform their tasks. An authorization policy can also help prevent unauthorized or malicious activities, as the users or systems are restricted from accessing or performing actions that are not allowed or necessary.

A hardening policy is a set of rules and guidelines that define how to reduce the attack surface and vulnerability of a system or network. A hardening policy can help improve the security and resilience of a system or network by applying measures such as disabling unnecessary services, removing default accounts, applying patches and updates, and configuring firewalls and antivirus software. A hardening policy can also help prevent unauthorized or malicious activities, as the users or systems are faced with more obstacles and challenges to compromise the system or network.

An access policy is a set of rules and guidelines that define who or what can access a system or network, and under what conditions or circumstances. An access policy can help control the authentication and identification of users or systems, and the verification and validation of their credentials. An access policy can also help prevent unauthorized or malicious activities, as the users or systems are required to prove their identity and legitimacy before accessing the system or network. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 6: Cloud Service Management, pages 229-230.
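The audit behavior described in the scenario, writing a log entry each time an administrator logs in remotely, can be sketched with Python's standard logging module. This is only an illustration: the logger name, message format, user name, and IP address are hypothetical, and an in-memory buffer stands in for the server's log file.

```python
import io
import logging


def build_audit_logger(stream):
    """Create a logger that writes timestamped audit entries to the given stream."""
    logger = logging.getLogger("audit.remote_login")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate entries if called twice
    logger.propagate = False  # keep audit entries out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


def record_remote_login(logger, username, source_ip):
    """Record who logged in remotely and from where; the timestamp is added by the formatter."""
    logger.info("remote admin login user=%s source_ip=%s", username, source_ip)


log_buffer = io.StringIO()  # stands in for a log file on the server
audit = build_audit_logger(log_buffer)
record_remote_login(audit, "admin01", "203.0.113.7")
print(log_buffer.getvalue(), end="")
```

Note that the code only records the event. It does not decide whether the login is allowed, which is what separates an audit policy from an authorization or access policy.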

A SaaS provider specifies in a user agreement that the customer agrees that any misuse of the service will be the responsibility of the customer. Which of the following risk response methods was applied?

Acceptance

Avoidance

Transference

Mitigation

Transference is a risk response method that involves shifting the responsibility or impact of a risk to a third party. Transference does not eliminate the risk, but it reduces the exposure or liability of the original party. A common example of transference is insurance, where the risk is transferred to the insurer in exchange for a premium. In this case, the SaaS provider transfers the risk of misuse of the service to the customer by specifying it in the user agreement.

Which of the following risks is MOST likely a result of vendor lock-in?

Premature obsolescence

Data portability issues

External breach

Greater system vulnerability

Data portability is the ability to move data from one cloud service provider to another without losing functionality, quality, or security. Vendor lock-in is a situation where a customer becomes dependent on a particular cloud service provider and faces high switching costs, lack of interoperability, and contractual obligations. Vendor lock-in can result in data portability issues, as the customer may have difficulty transferring their data to a different cloud service provider if they are dissatisfied with the current one or want to take advantage of better offers. Data portability issues can affect the customer’s flexibility, agility, and cost-efficiency in the cloud. References: CompTIA Cloud Essentials+ Certification Study Guide, Second Edition (Exam CLO-002), Chapter 1: Cloud Principles and Design, pages 19-20.

Which of the following is the result of performing a physical-to-virtual migration of desktop workstations?

SaaS

IaaS

VDI

VPN

VDI, or Virtual Desktop Infrastructure, is the result of performing a physical-to-virtual migration of desktop workstations. VDI is a technology that allows users to access and run desktop operating systems and applications from a centralized server in a data center or a cloud, instead of from a physical machine on their premises. VDI provides users with virtual desktops that are delivered over a network to various devices, such as laptops, tablets, or thin clients. VDI offers several benefits, such as improved security, reduced costs, increased flexibility, and enhanced performance.

SaaS, or Software as a Service, is not the result of performing a physical-to-virtual migration of desktop workstations, but a cloud service model that provides ready-to-use software applications that run on the cloud provider’s infrastructure and are accessed via a web browser or an API. SaaS does not involve migrating desktop workstations, but using software applications that are hosted and managed by the cloud provider.

IaaS, or Infrastructure as a Service, is not the result of performing a physical-to-virtual migration of desktop workstations, but a cloud service model that provides access to basic computing resources, such as servers, storage, network, and virtualization, that are hosted on the cloud provider’s data centers and are rented on-demand. IaaS does not involve migrating desktop workstations, but renting infrastructure resources that can be used to host various workloads.

VPN, or Virtual Private Network, is not the result of performing a physical-to-virtual migration of desktop workstations, but a technology that creates a secure and encrypted connection between a device and a network over the internet. VPN does not involve migrating desktop workstations, but connecting to a network that can provide access to remote resources or services. References: What is VDI? Virtual Desktop Infrastructure Definition - VMware; VDI Benefits: 7 Advantages of Virtual Desktop Infrastructure; What is SaaS? Software as a service | Microsoft Azure; What is IaaS? Infrastructure as a service | Microsoft Azure; What is a VPN? | HowStuffWorks.

A company is planning to use cloud computing to extend the compute resources that will run a new resource-intensive application. A direct deployment to the cloud would cause unexpected billing. Which of the following must be generated while the application is running on-premises to better predict the cloud budget for this project?

Proof of concept

Benchmark

Baseline

Feasibility study

A baseline is a snapshot of the current state of a system or an environment that serves as a reference point for future comparisons. A baseline can capture various aspects of a system, such as performance, cost, configuration, and resource utilization. By generating a baseline while the application is running on-premises, the company can better predict the cloud budget for the project by estimating the cloud resources and services that would match or exceed the baseline values. A baseline can also help the company to monitor and optimize the cloud deployment and identify any anomalies or deviations from the expected behavior. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, Chapter 5: Cloud Migration, page 197; Addressing Cloud Security with Infrastructure Baselines - Fugue.
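A minimal sketch of how an on-premises baseline could feed a budget estimate follows. The vCPU samples, the hourly rate, and the 730-hour month are assumptions for illustration only. A real estimate would also cover memory, storage, and network usage, and would use the provider's published prices.

```python
from statistics import mean


def build_baseline(vcpu_samples):
    """Summarize observed on-premises usage into a reference point."""
    return {"avg_vcpus": mean(vcpu_samples), "peak_vcpus": max(vcpu_samples)}


def estimate_monthly_cost(baseline, rate_per_vcpu_hour, hours_per_month=730):
    """Project a monthly compute cost from the baseline's average usage."""
    return baseline["avg_vcpus"] * rate_per_vcpu_hour * hours_per_month


# Hypothetical vCPU usage sampled while the application runs on-premises.
on_prem_vcpu_samples = [4, 6, 8, 6]

baseline = build_baseline(on_prem_vcpu_samples)
estimate = estimate_monthly_cost(baseline, rate_per_vcpu_hour=0.05)  # assumed rate
print(baseline, round(estimate, 2))
```

Keeping the peak alongside the average lets the same baseline be used later to spot deviations once the workload runs in the cloud.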

Which of the following are considered secure access types of hosts in the cloud? (Choose two.)

HTTPS

HTTP

SSH

Telnet

RDP

FTP

HTTPS and SSH are considered secure access types of hosts in the cloud because they use encryption and authentication to protect the data and the identity of the users. HTTPS is a protocol that uses SSL or TLS to encrypt the communication between a web browser and a web server. SSH is a protocol that allows secure remote login and file transfer over a network. Both HTTPS and SSH prevent unauthorized access, eavesdropping, and tampering of the data in transit. References: CompTIA Cloud Essentials+ Certification Study Guide, Second Edition (Exam CLO-002), Chapter 3: Security in the Cloud, pages 83-84.
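As a small illustration of the encryption and authentication that HTTPS relies on, the sketch below builds a TLS client context with Python's standard ssl module and inspects its settings. It makes no network connection, and the choice of TLS 1.2 as the minimum version is an assumption for the example rather than a requirement stated in the study guide.

```python
import ssl

# Default client context: server certificates are validated and
# the hostname is checked against the certificate.
context = ssl.create_default_context()

# Refuse legacy protocol versions (attribute available in Python 3.7+).
context.minimum_version = ssl.TLSVersion.TLSv1_2

print(context.verify_mode == ssl.CERT_REQUIRED)
print(context.check_hostname)
```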

Which of the following is a valid mechanism for achieving interoperability when extracting and pooling data among different CSPs?

Use continuous integration/continuous delivery.

Recommend the use of the same CLI client.

Deploy regression testing to validate pooled data.

Adopt the use of communication via APIs.

APIs (application programming interfaces) are sets of rules and protocols that enable communication and data exchange between different applications or systems. APIs can facilitate interoperability when extracting and pooling data among different CSPs (cloud service providers) by allowing standardized and secure access to the data sources and services offered by each CSP. APIs can also enable automation, scalability, and customization of cloud solutions. References: CompTIA Cloud Essentials+ CLO-002 Study Guide, page 163; CompTIA Cloud Essentials+ Certification Training, CertMaster Learn for Cloud Essentials+, Module 4: Management and Technical Operations, Lesson 4.3: DevOps in the Cloud, Topic 4.3.1: API Integration
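One way to picture API-based interoperability is a thin adapter per provider that maps each API's response into a shared schema before the data is pooled. The sketch below is purely illustrative: the provider names, field names, and sample records are hypothetical and do not describe any real CSP's API.

```python
def from_provider_a(record):
    """Map a hypothetical provider A usage record to the shared schema."""
    return {
        "resource_id": record["instanceId"],
        "region": record["region"],
        "cpu_hours": float(record["cpuHours"]),
    }


def from_provider_b(record):
    """Map a hypothetical provider B usage record to the shared schema."""
    return {
        "resource_id": record["vm_name"],
        "region": record["location"],
        "cpu_hours": float(record["usage"]["cpu_h"]),
    }


ADAPTERS = {"provider_a": from_provider_a, "provider_b": from_provider_b}


def pool_usage(responses):
    """Normalize records from every provider and collect them in one list."""
    pooled = []
    for provider, records in responses.items():
        adapter = ADAPTERS[provider]
        for record in records:
            row = adapter(record)
            row["provider"] = provider
            pooled.append(row)
    return pooled


# Stand-ins for JSON bodies returned by each provider's API.
sample_responses = {
    "provider_a": [{"instanceId": "i-001", "region": "us-east", "cpuHours": 12.5}],
    "provider_b": [{"vm_name": "vm-42", "location": "eu-west", "usage": {"cpu_h": 7.5}}],
}

pooled = pool_usage(sample_responses)
total_cpu_hours = sum(row["cpu_hours"] for row in pooled)
print(pooled, total_cpu_hours)
```

Because each provider's differences are confined to its adapter, adding another CSP means writing one more mapping function rather than changing the pooling logic.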

Which of the following would BEST provide access to a Windows VDI?

RDP

VPN

SSH

HTTPS

RDP stands for Remote Desktop Protocol, which is a protocol that allows a user to remotely access and control a Windows-based computer or virtual desktop from another device over a network. RDP can be used to provide access to a Windows VDI, which is a virtual desktop infrastructure that delivers Windows desktops and applications as a cloud service. RDP can provide a full graphical user interface, keyboard, mouse, and audio support, as well as features such as clipboard sharing, printer redirection, and file transfer. RDP can be accessed by using the built-in Remote Desktop Connection client in Windows, or by using third-party applications or web browsers. RDP is more suitable for accessing a Windows VDI than other protocols, such as VPN, SSH, or HTTPS, which may not support the same level of functionality, performance, or security. References: CompTIA Cloud Essentials+ Certification Exam Objectives; CompTIA Cloud Essentials+ Study Guide, Chapter 6: Cloud Connectivity and Load Balancing; How To Use The Remote Desktop Protocol To Connect To A Linux Server.