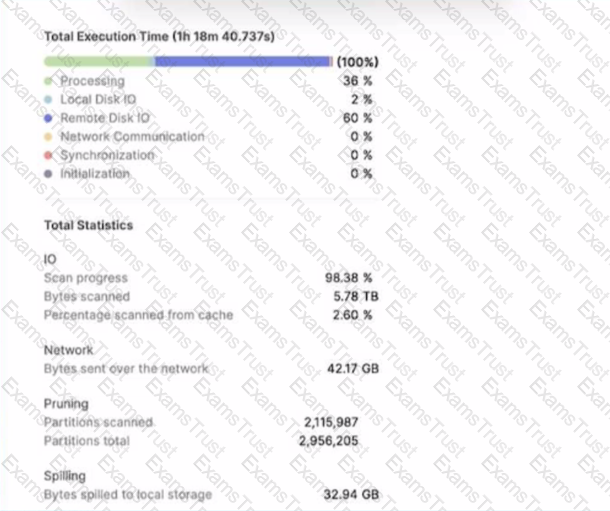

A large table with 200 columns contains two years of historical data. When queried, the table is filtered on a single day. Below is the Query Profile:

Using a size 2XL virtual warehouse, this query took over an hour to complete.

What will improve the query performance the MOST?

What are characteristics of Snowpark Python packages? (Select THREE).

Third-party packages can be registered as a dependency to the Snowpark session using the `session.import()` method.

Which functions will compute a 'fingerprint' over an entire table, query result, or window to quickly detect changes to table contents or query results? (Select TWO).

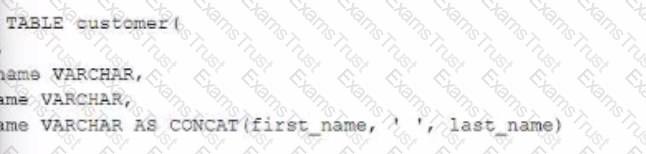

A new customer table is created by a data pipeline in a Snowflake schema where MANAGED ACCESS is enabled.

…. can grant access to the CUSTOMER table? (Select THREE).

A Data Engineer is working on a continuous data pipeline which receives data from Amazon Kinesis Firehose and loads the data into a staging table which will later be used in the data transformation process. The average file size is 300-500 MB.

The Engineer needs to ensure that Snowpipe is performant while minimizing costs.

How can this be achieved?

A Data Engineer has written a stored procedure that will run with caller's rights. The Engineer has granted ROLEA the right to use this stored procedure.

What is a characteristic of the stored procedure being called using ROLEA?

A Data Engineer needs to ingest invoice data in PDF format into Snowflake so that the data can be queried and used in a forecasting solution.

..... recommended way to ingest this data?

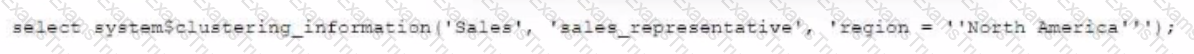

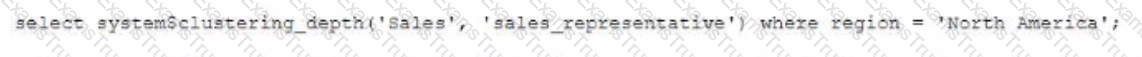

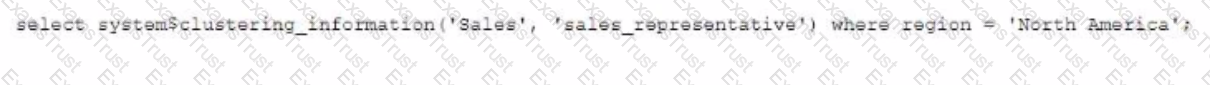

Given the table SALES, which has a clustering key of column CLOSED_DATE, which table function will return the average clustering depth for the SALES_REPRESENTATIVE column for the North American region?

A)

B)

C)

D)

Company A and Company B both have Snowflake accounts. Company A's account is hosted on a different cloud provider and region than Company B's account. Companies A and B are not in the same Snowflake organization.

How can Company A share data with Company B? (Select TWO).

A Data Engineer ran a stored procedure containing various transactions. During the execution, the session abruptly disconnected, preventing one transaction from committing or rolling back. The transaction was left in a detached state and created a lock on resources.

...must the Engineer take to immediately run a new transaction?

A stream called TRANSACTIONS_STM is created on top of a TRANSACTIONS table in a continuous pipeline running in Snowflake. After a couple of months, the TRANSACTIONS table is renamed TRANSACTIONS_RAW to comply with new naming standards.

What will happen to the TRANSACTIONS_STM object?

Assuming that the session parameter USE_CACHED_RESULT is set to false, what are characteristics of Snowflake virtual warehouses in terms of the use of Snowpark?

A company is using Snowpipe to bring in millions of rows of Change Data Capture (CDC) data every day into a Snowflake staging table on a real-time basis. The CDC data needs to be processed and combined with other data in Snowflake and land in a final table as part of the full data pipeline.

How can a Data Engineer MOST efficiently process the incoming CDC on an ongoing basis?

A Data Engineer executes a complex query and wants to make use of Snowflake's query results caching capabilities to reuse the results.

Which conditions must be met? (Select THREE).

A Data Engineer is building a pipeline to transform a 1 TB table by joining it with supplemental tables. The Engineer is applying filters and several aggregations leveraging Common Table Expressions (CTEs) using a size Medium virtual warehouse in a single query in Snowflake.

After checking the Query Profile, what is the recommended approach to MAXIMIZE performance of this query if the Profile shows data spillage?

A company built a sales reporting system with Python, connecting to Snowflake using the Python Connector. Based on the user's selections, the system generates the SQL queries needed to fetch the data for the report. First, it gets the customers that meet the given query parameters (on average 1000 customer records for each report run), and then it loops over the customer records sequentially. Inside that loop, it runs the generated SQL clause for the current customer to get the detailed data for that customer number from the sales data table.

When the Data Engineer tested the individual SQL clauses, they were fast enough (1 second to get the customers, 0.5 seconds to get the sales data for one customer), but the total runtime of the report is too long.
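To make the scale of the problem concrete, the timings in the scenario can be turned into a rough estimate. The sketch below only does the arithmetic implied by the stated figures; the function and constant names are hypothetical, and it assumes each per-customer query takes the quoted 0.5 seconds with no additional overhead for network round trips or result fetching.

```python
# Figures taken from the scenario text; the names are illustrative only.
CUSTOMER_QUERY_SECONDS = 1.0       # one query to fetch the matching customers
PER_CUSTOMER_QUERY_SECONDS = 0.5   # one query per customer for sales details
AVERAGE_CUSTOMERS = 1000           # average customers per report run


def sequential_runtime_seconds(
    customers: int = AVERAGE_CUSTOMERS,
    customer_query: float = CUSTOMER_QUERY_SECONDS,
    per_customer_query: float = PER_CUSTOMER_QUERY_SECONDS,
) -> float:
    """Estimate total runtime when per-customer queries run one after another."""
    return customer_query + customers * per_customer_query


if __name__ == "__main__":
    total = sequential_runtime_seconds()
    print(f"Estimated sequential runtime: {total:.0f} seconds")
```

Under these assumptions, the per-customer loop accounts for almost all of the runtime, which is why individually fast statements still add up to a slow report.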

How can this situation be improved?

Which methods will trigger an action that will evaluate a DataFrame? (Select TWO).

A Data Engineer needs to load JSON output from some software into Snowflake using Snowpipe.

Which recommendations apply to this scenario? (Select THREE).

A Data Engineer defines the following masking policy:

….

must be applied to the full_name column in the customer table:

Which query will apply the masking policy on the full_name column?